Personalized Hand Models for VR

For a truly immersive experience

I've always preferred story-driven games to competitive ones. When you dive into a game like this, you experience a whole range of emotions and may even grow as a person by the end. Despite all that, I came to realize that although we control these characters, which are in a way our avatars, they have nothing to do with us!

When playing a game, you see the body and hands of your avatar instead of your own. Some MMORPGs let you customize your character, but making it look like you still takes a lot of manual tweaking.

This matters even more in virtual reality, where control is literally in the player's hands. Hand-object interaction is at the center of a great VR experience.

Seeing someone else's hands move instead of your own feels uncanny and causes cognitive dissonance. Although this sounds like a small detail, it can really break immersion, especially in VR. To work around this, most games use clever tricks, like covering the player's hands or showing gloves instead.

However, hands differ in shapes and sizes, so these workarounds only offer partial solutions to the problem.

Hands in The Gallery - Episode 1: Call of the Starseed

With my project, I aim to transform the character's body to resemble the player's own physical characteristics, making gaming a truly personal, and therefore more immersive, experience.

Recent advances in artificial intelligence have made it possible to take personalization to the next level, whether by generating speech on the fly with text-to-speech (TTS) or by modeling the player's face from a single image.

Online Demo

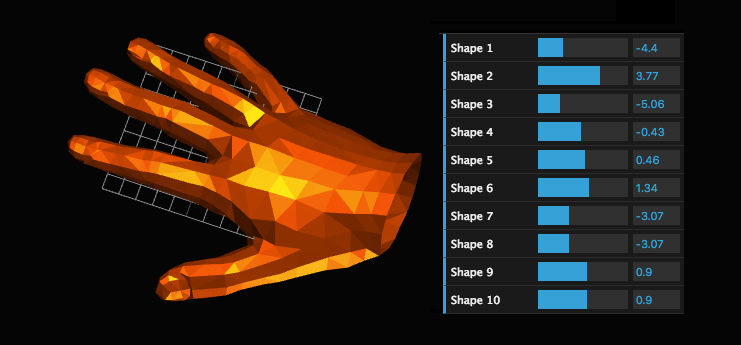

Take a look at my interactive demo here. You can change the shape of the hand by adjusting the sliders on the right.

Try finding the right parameters for your hand and see how close you can get. 😉

Leave a comment with your results.

Deformable Hand Demo

The Model

As a starting point, I'm using the MANO model, a parametric hand model published by the Perceiving Systems Department of the Max Planck Institute for Intelligent Systems in Tübingen.
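In short, "parametric" means that the hand's shape is controlled by a handful of numbers. In MANO, as in the SMPL body model it builds on, the shape offsets are linear: the rest-pose mesh is a template plus a weighted sum of per-vertex offset directions, one weight per shape parameter (10 in this project). The NumPy sketch below shows that sum; `apply_shape` is a hypothetical name, and the array layout (vertices × 3 × parameters) is an assumption about how the offsets are stored.

```python
import numpy as np


def apply_shape(template, shape_dirs, betas):
    """Return rest-pose vertices: template + sum_k betas[k] * shape_dirs[:, :, k].

    template:   (V, 3) mean hand vertices
    shape_dirs: (V, 3, K) one offset direction per shape parameter
    betas:      (K,) shape coefficients, e.g. slider values
    """
    template = np.asarray(template, dtype=float)
    shape_dirs = np.asarray(shape_dirs, dtype=float)
    betas = np.asarray(betas, dtype=float)
    if shape_dirs.shape != template.shape + betas.shape:
        raise ValueError("expected shape_dirs of shape (V, 3, K)")
    # (V, 3, K) @ (K,) -> (V, 3): a weighted sum of the offset directions
    return template + shape_dirs @ betas
```

Because the model is linear in the shape parameters, setting all of them to zero returns the template, and each slider moves every vertex along a fixed direction.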

I'll go into more detail in a follow-up article. If you are interested, make sure to click the Follow button next to my name.

The researchers at Perceiving Systems published all the data accompanying their high-quality paper, which is still rare these days.

There is a catch, however. Their code targets Python 2, so the data is stored in a Pickle format that does not load out of the box in Python 3, which I'm using. Furthermore, the structure of the files was "documented" only by example code, so I had to figure out the purpose of each matrix from its dimensions and its arbitrary name.
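Since the matrices came with so little documentation, a small helper can list every entry with its shape and a guess at its role. This is an illustrative sketch, not the code I used: `load_legacy_pickle` and `describe_shapes` are hypothetical names, and the guesses are heuristics built only on the vertex and joint counts of the model (778 and 16). Passing `encoding="latin1"` to `pickle.load` is the standard way to let Python 3 decode Python 2 byte strings, including NumPy arrays pickled under Python 2; it does not help if the file references classes from a package that is not installed.

```python
import pickle

import numpy as np


def load_legacy_pickle(path):
    """Load a pickle that may have been written by Python 2."""
    # latin1 maps Python 2 byte strings 1:1, which NumPy arrays pickled
    # under Python 2 need; missing third-party classes will still fail.
    with open(path, "rb") as f:
        return pickle.load(f, encoding="latin1")


def describe_shapes(data, n_verts=778, n_joints=16):
    """Map each key to (shape, guessed role), judging by dimensions only."""
    report = {}
    for key, value in data.items():
        shape = tuple(np.shape(value))  # works for arrays, sparse matrices, scalars
        if shape == (n_verts, 3):
            guess = "per-vertex positions?"
        elif shape == (n_verts, n_joints):
            guess = "per-vertex skinning weights?"
        elif shape == (n_joints, n_verts):
            guess = "joint regressor (vertices to joints)?"
        elif len(shape) == 3 and shape[:2] == (n_verts, 3):
            guess = f"{shape[2]} per-vertex offset directions (blend shapes?)"
        elif len(shape) == 2 and shape[1] == 3:
            guess = f"{shape[0]} x 3 table (triangle faces?)"
        else:
            guess = "unknown"
        report[key] = (shape, guess)
    return report
```

Printing each `(key, shape, guess)` from `describe_shapes(load_legacy_pickle(path))` gives a quick first map of the file, which still has to be confirmed against the example code.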

All in all, I concluded that I needed to export the values as JSON files to keep them usable across programming languages.
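A minimal sketch of such an export, assuming the loaded data is a dictionary whose values are NumPy arrays, NumPy scalars, SciPy-style sparse matrices (anything with a `toarray()` method) or plain Python values. `to_jsonable` and `export_json` are hypothetical helpers; nested lists preserve the matrix dimensions, so any language with a JSON parser can read the arrays back.

```python
import json

import numpy as np


def to_jsonable(value):
    """Convert NumPy and sparse values into plain lists and numbers."""
    if hasattr(value, "toarray"):       # sparse matrices expose toarray()
        value = value.toarray()
    if isinstance(value, np.ndarray):
        return value.tolist()           # nested lists keep the dimensions
    if isinstance(value, np.generic):
        return value.item()             # e.g. np.int64 -> int
    return value                        # strings, ints, floats pass through


def export_json(data, path):
    """Write every entry of `data` to a single JSON file and return the payload."""
    payload = {key: to_jsonable(value) for key, value in data.items()}
    with open(path, "w") as f:
        json.dump(payload, f)
    return payload
```

Writing one file per model keeps things simple; splitting large matrices into separate files would be an option if load times in the game engine became a problem.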

Skeleton with Blend Weights

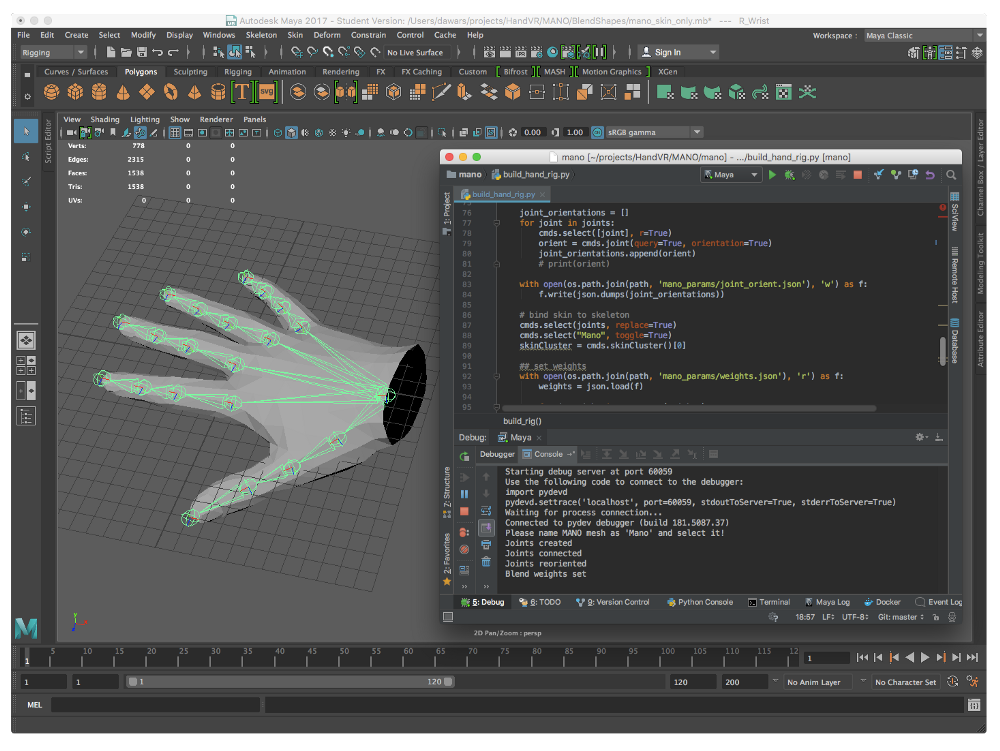

Based on the published files, I rebuilt the whole model in Maya so that I could export it to different game engines.

I managed to set up the blend shapes by hand, but that was not an option for the rig: I would have had to set the blend weights manually for each of the 778 vertices and each of the 16 joints.

Fortunately, I found a better way: scripting! Maya supports two scripting languages, MEL and Python, so naturally I chose Python.

There is a plugin for my favorite IDE, PyCharm, that connects to the Python interpreter running inside Maya and executes code on it. This let me use the matrices I had exported earlier and made the whole process very pleasant.
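As an illustration of this approach, here is a sketch that sets skin weights from an exported vertices × joints weight matrix using Maya's `skinPercent` command and its `transformValue` flag. The mesh, skin cluster and joint names are placeholders, `weight_pairs` and `apply_weights` are hypothetical helpers, and the pruning threshold is an arbitrary choice. The pure-Python part avoids NumPy and f-strings so it does not depend on what Maya's interpreter provides.

```python
try:  # maya.cmds is only importable inside Maya's Python interpreter
    from maya import cmds
except ImportError:
    cmds = None


def weight_pairs(weights, joint_names, threshold=1e-4):
    """Turn a vertices x joints weight matrix into per-vertex (joint, weight) lists.

    Weights at or below `threshold` are zeroed and the rest renormalized,
    so every vertex lists all joints and its weights sum to 1.
    """
    result = []
    for index, row in enumerate(weights):
        if len(row) != len(joint_names):
            raise ValueError("vertex %d has %d weights for %d joints"
                             % (index, len(row), len(joint_names)))
        cleaned = [float(w) if w > threshold else 0.0 for w in row]
        total = sum(cleaned)
        if total == 0.0:
            raise ValueError("vertex %d has no significant weights" % index)
        result.append([(name, w / total) for name, w in zip(joint_names, cleaned)])
    return result


def apply_weights(mesh, skin_cluster, pairs):
    """Inside Maya: assign each vertex's weights with skinPercent."""
    if cmds is None:
        raise RuntimeError("apply_weights must be run inside Maya")
    for index, vertex_pairs in enumerate(pairs):
        cmds.skinPercent(skin_cluster, "%s.vtx[%d]" % (mesh, index),
                         transformValue=vertex_pairs)
```

Listing every joint for every vertex, zeros included, fully specifies each vertex's weights, so, assuming the skin cluster's influences are exactly these joints, Maya's weight normalization has nothing left to redistribute.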

Executing Python code in Maya

Leap Motion

To check that everything worked correctly, I imported the model into Unity and paired it with Leap Motion, a small device that tracks your hand movements in real time.

Unfortunately, it is not precise enough to track arbitrary hand poses, but it is a fun toy nevertheless. This is because the model Leap Motion uses favors more common gestures. At the end of the video, I touch each of my fingertips with my thumb, but the virtual fingers don't move separately and never make contact.

Leap Motion joint orientations (Source)

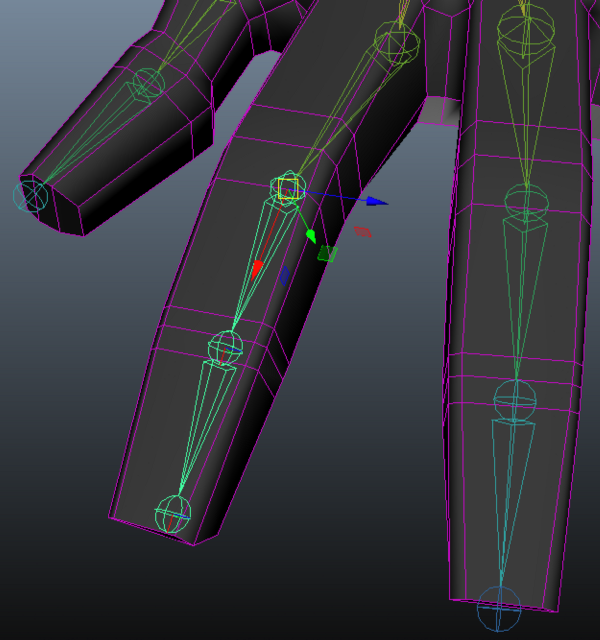

However, the setup was not as easy as it sounds: Leap Motion requires the skeleton's joints to be oriented in a specific way.

One axis runs along the bone, another along the axis of rotation, and the third completes an orthonormal basis.
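The same construction fits in a few lines of linear algebra: take the bone direction, remove its component from an approximate bend axis (one Gram-Schmidt step), and use a cross product for the third axis. This is a sketch, not Maya's Orient Joint implementation; `joint_frame` is a hypothetical name, and which axis plays which role (here x along the bone, z as the bend axis) is an assumption, since Leap Motion's expected convention may differ.

```python
import numpy as np


def joint_frame(joint_pos, child_pos, bend_axis_hint):
    """Return a 3x3 matrix whose rows are the joint's x, y, z axes.

    x: along the bone (joint -> child)
    z: the bend (rotation) axis, made exactly perpendicular to x
    y: z cross x, completing a right-handed orthonormal basis
    """
    x = np.asarray(child_pos, dtype=float) - np.asarray(joint_pos, dtype=float)
    length = np.linalg.norm(x)
    if length < 1e-8:
        raise ValueError("joint and child positions coincide")
    x /= length
    hint = np.asarray(bend_axis_hint, dtype=float)
    z = hint - np.dot(hint, x) * x  # Gram-Schmidt: drop the component along the bone
    norm = np.linalg.norm(z)
    if norm < 1e-8:
        raise ValueError("bend axis hint is parallel to the bone")
    z /= norm
    y = np.cross(z, x)  # unit length because z and x are orthonormal
    return np.stack([x, y, z])
```

Since y = z × x, it follows that x × y = z, so the resulting frame is right-handed and its determinant is 1.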

Luckily, Maya has an Orient Joint feature that does just that. It was a great time saver, and I could even call it from code.

After that, pairing the rig with the Leap Motion controller was easy using their auto-rig tool.

Goal

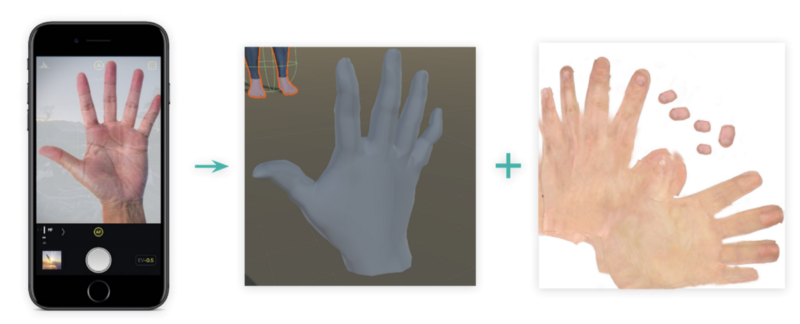

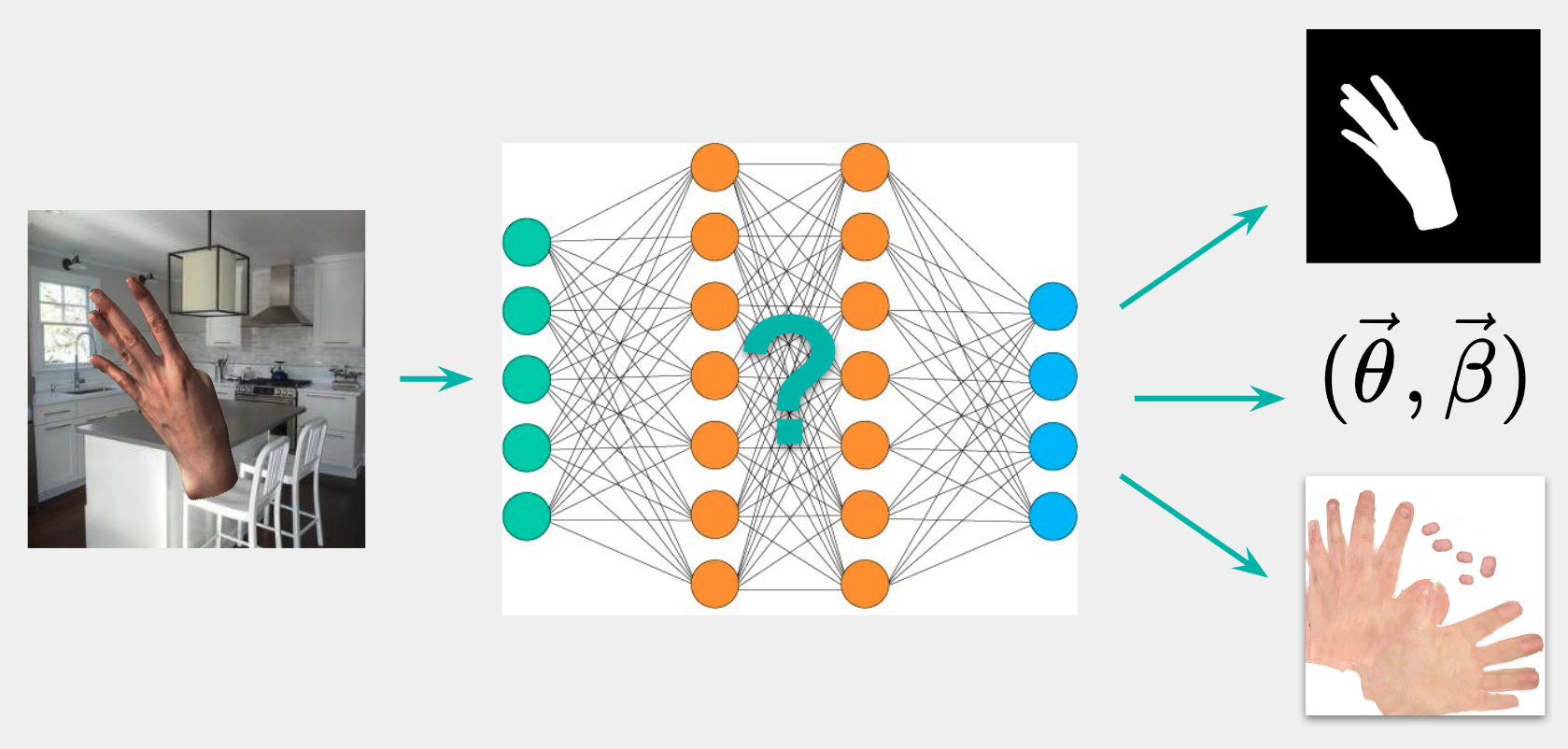

The end result of this project will be a mobile app that transforms photos of a hand into a textured 3D mesh described by 10 shape parameters and a set of texture files (albedo, normal, etc.). These will be extracted with Convolutional Neural Networks (CNNs), the state-of-the-art method for image processing tasks, and then transferred to a supported game of your choice. Thanks to the MANO model we use as a base, the mesh can be animated with conventional methods.
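One possible shape for the data the app could hand over to a game is sketched below: the shape parameters plus references to the texture files. The `HandProfile` class and its field names are hypothetical; the only constraint taken from the text is the count of 10 shape parameters.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List

NUM_SHAPE_PARAMS = 10  # shape parameters per hand, as described above


@dataclass
class HandProfile:
    betas: List[float]  # shape parameters estimated by the network
    textures: Dict[str, str] = field(default_factory=dict)  # e.g. "albedo" -> file name

    def __post_init__(self):
        self.betas = [float(b) for b in self.betas]
        if len(self.betas) != NUM_SHAPE_PARAMS:
            raise ValueError(f"expected {NUM_SHAPE_PARAMS} shape parameters, "
                             f"got {len(self.betas)}")

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text: str) -> "HandProfile":
        return cls(**json.loads(text))
```

Referencing textures by file name rather than embedding them keeps the profile small, so the same JSON could be sent to a game whether the network runs on the phone or in the cloud.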

Mobile app concept

Whether the computations run on the device or in the cloud will depend on how computationally demanding the network is. On-device inference would be preferable for privacy: hand data can be used for biometric identification, so it should be treated with extra care, not to mention the data protection requirements GDPR imposes for European users. With on-device inference, the data never leaves the phone, which helps comply with these regulations.

What's next?

Currently, I'm finishing cleaning up the training data and starting to train a neural network. In the next post, I'll write about the results of the first prototype, which will estimate the shape parameters, and about what I've learned along the way. In the meantime, follow me to get notified when it comes out.

If you liked this article, follow me on Medium and on social media.

Never miss anything! I write about the intersection of Machine Learning and Computer Graphics.