Multi-reference

DeepRemaster

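Code: https://github.com/satoshiiizuka/siggraphasia2019_remastering (paper: "DeepRemaster: Temporal Source-Reference Attention Networks for Comprehensive Video Enhancement", SIGGRAPH Asia 2019)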

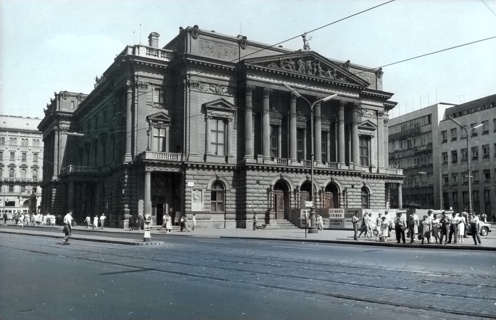

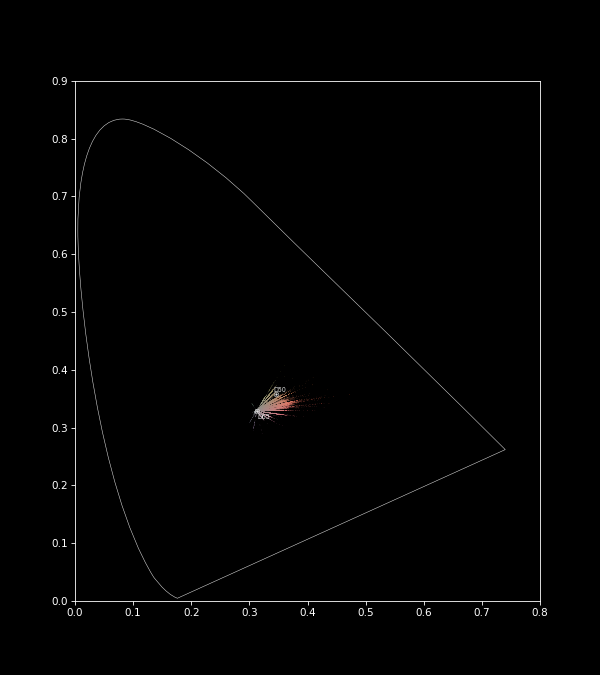

This model was originally designed for film colorization, so it expects a sequence of frames rather than a single image. To run this benchmark, the input image is duplicated 5 times to form a short clip. The reference images are meant to be colored frames chosen from the film being restored.
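A minimal sketch of the duplication step, assuming the grayscale input is a 2-D NumPy array and that the model takes a clip laid out as (batch, channel, time, height, width). That layout and the helper name `make_clip` are illustrative assumptions, not taken from the DeepRemaster code.

```python
import numpy as np

NUM_FRAMES = 5  # the benchmark duplicates each input image 5 times


def make_clip(gray: np.ndarray, num_frames: int = NUM_FRAMES) -> np.ndarray:
    """Turn one grayscale image (H, W) into a static clip (1, 1, T, H, W).

    Hypothetical helper: the (batch, channel, time, height, width) layout
    is an assumption about how a video model expects its input.
    """
    if gray.ndim != 2:
        raise ValueError(f"expected a 2-D grayscale image, got shape {gray.shape}")
    # Stack identical copies along a new leading time axis: (T, H, W)
    frames = np.repeat(gray[np.newaxis, :, :], num_frames, axis=0)
    # Add batch and channel axes in front: (1, 1, T, H, W)
    return frames[np.newaxis, np.newaxis]


if __name__ == "__main__":
    img = np.random.rand(64, 96).astype(np.float32)
    clip = make_clip(img)
    print(clip.shape)  # (1, 1, 5, 64, 96)
```

Because all five frames are identical, the model's temporal processing sees no motion at all, so the benchmark effectively probes its single-image behavior.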

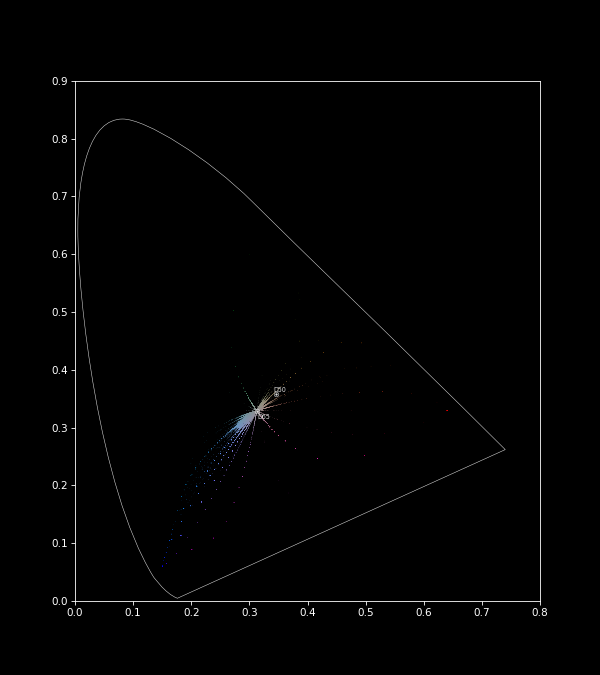

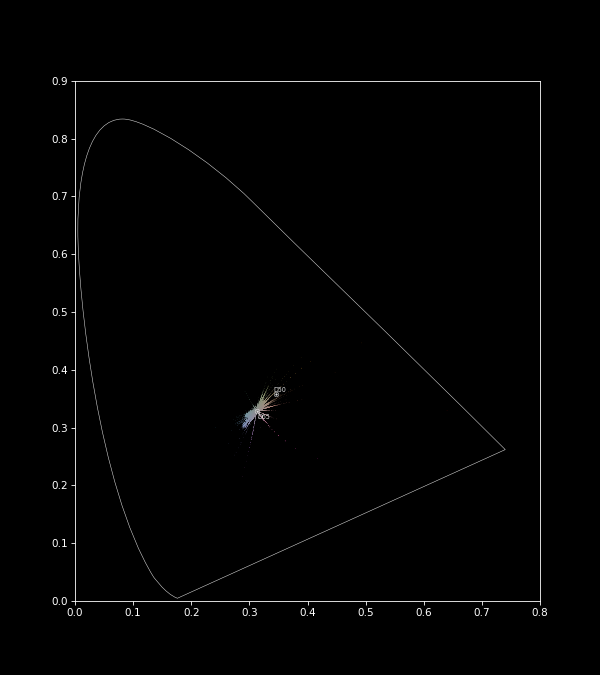

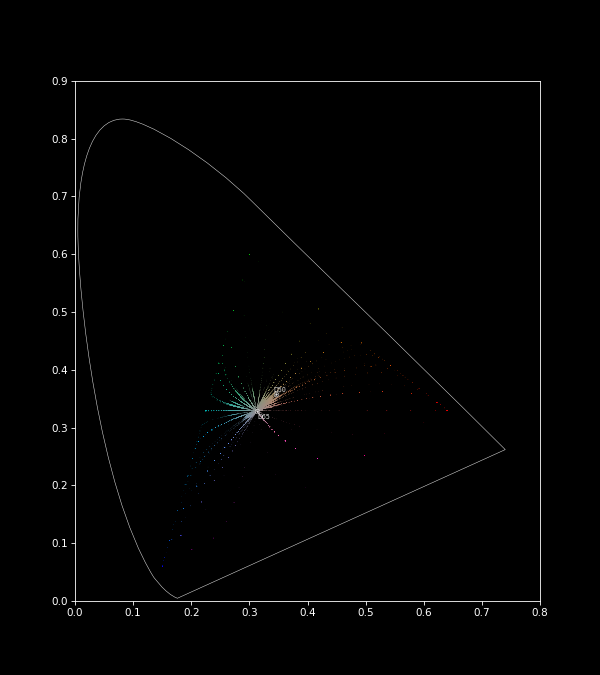

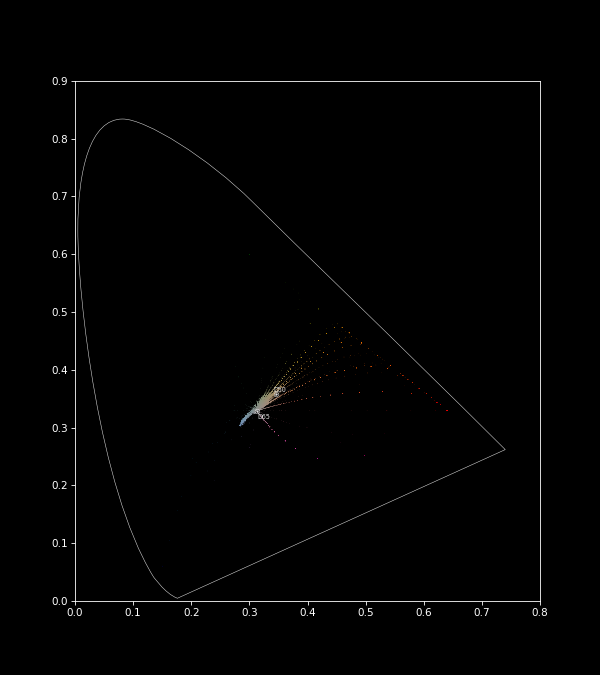

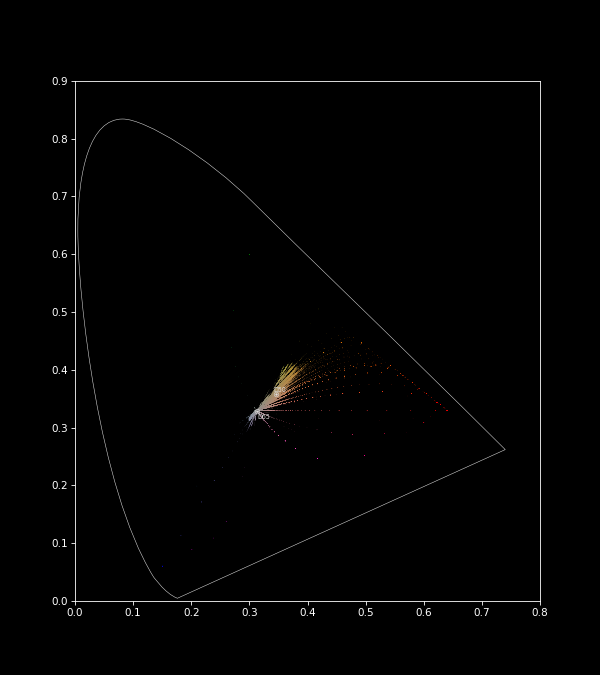

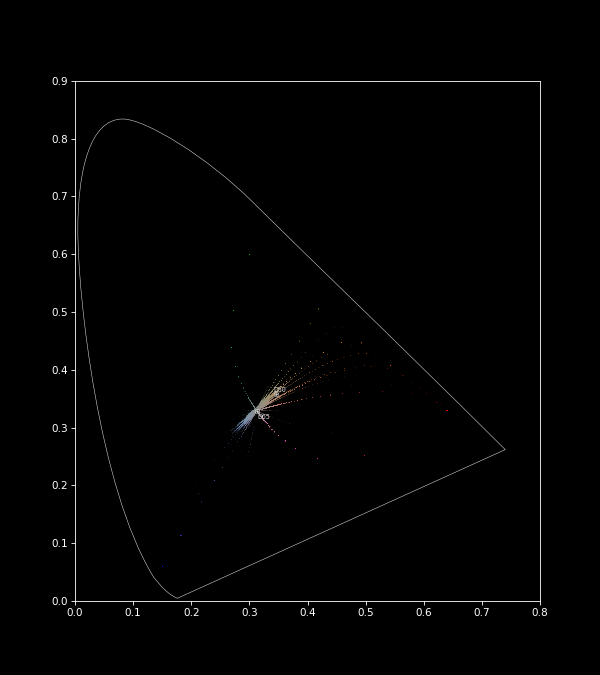

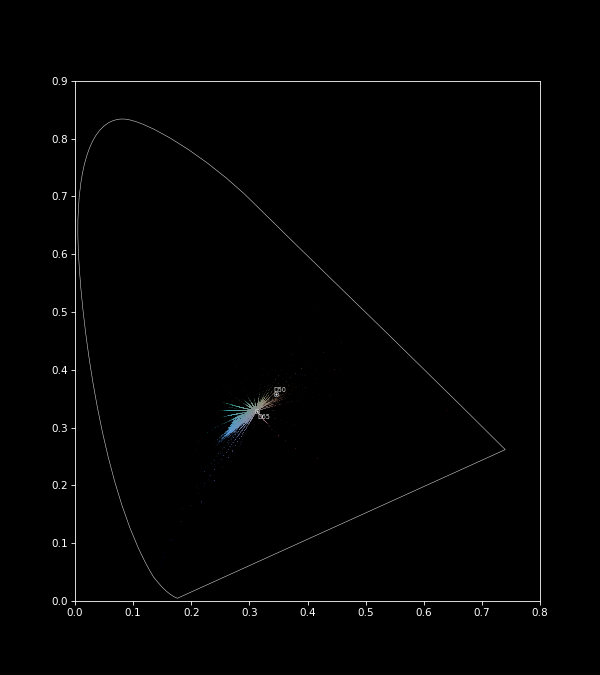

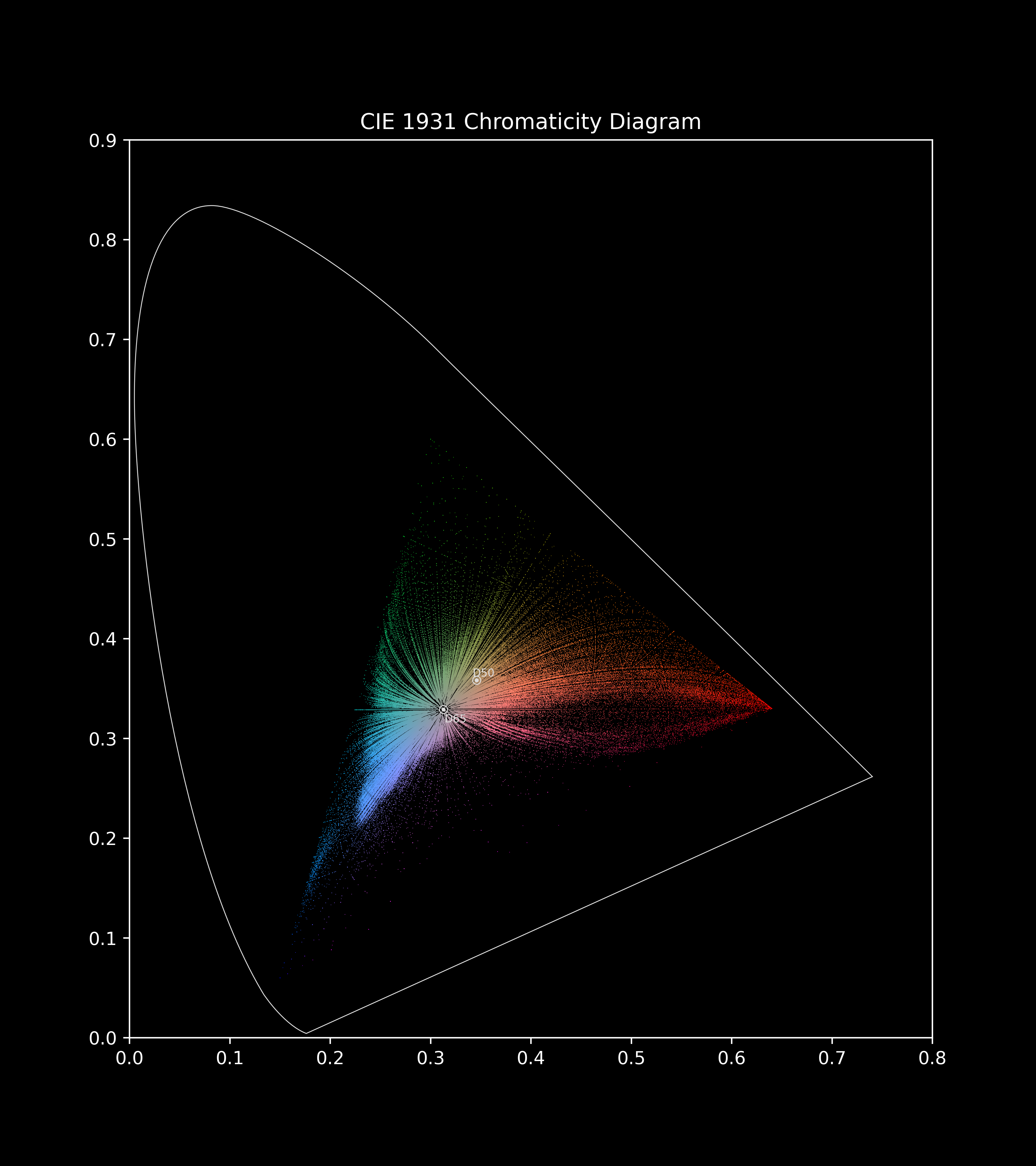

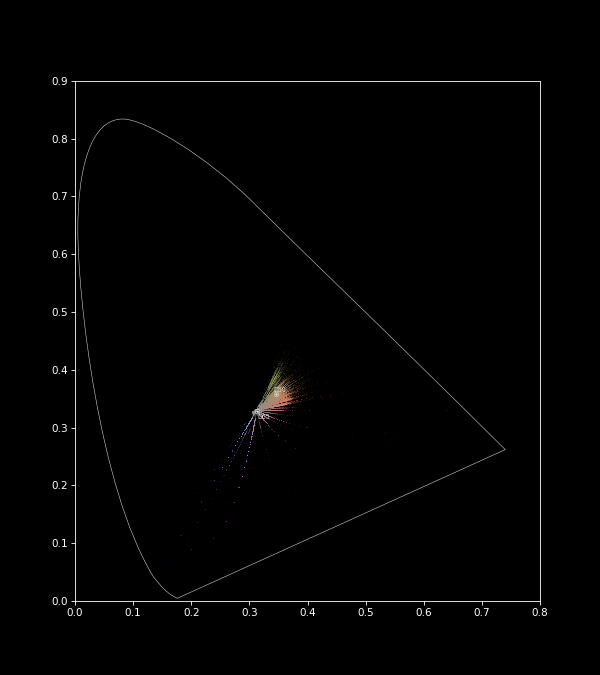

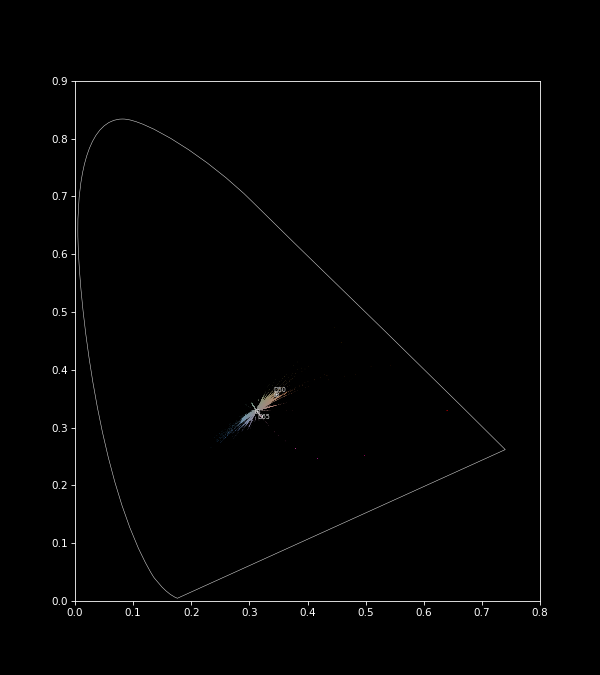

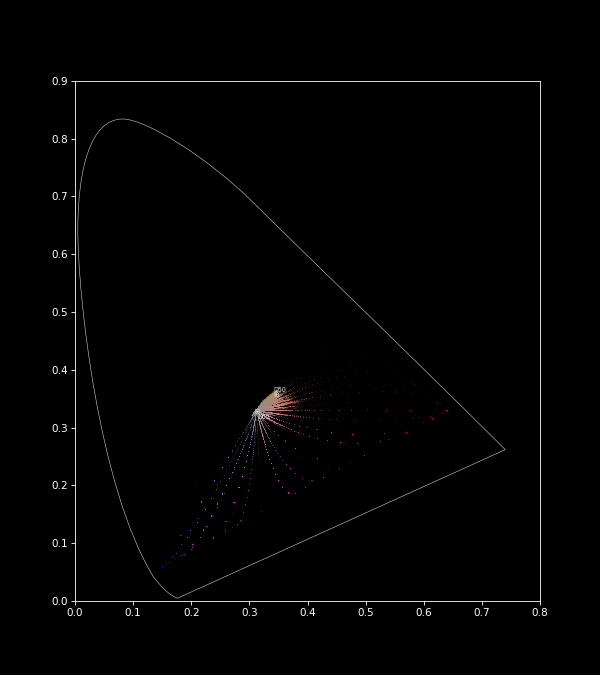

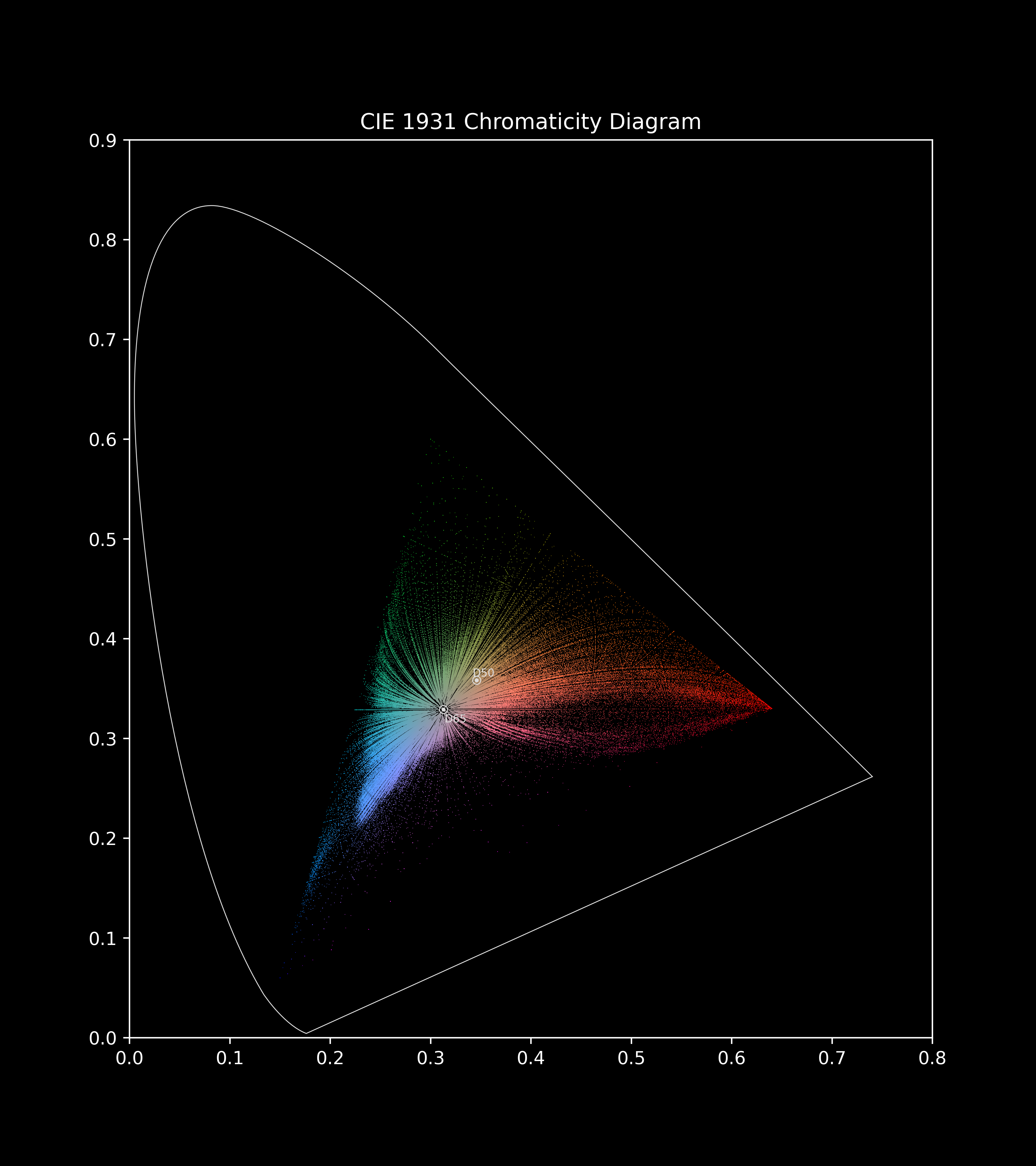

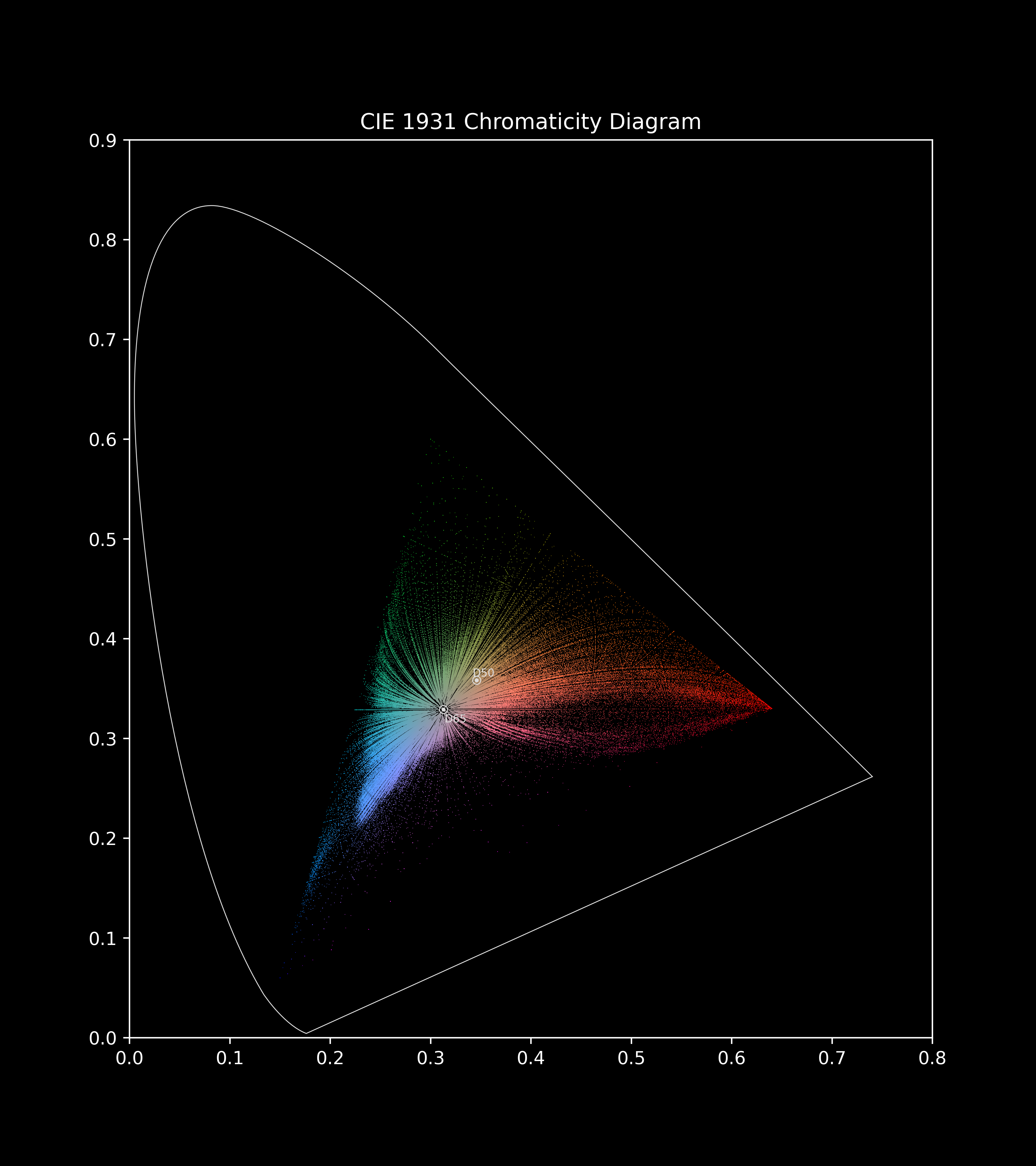

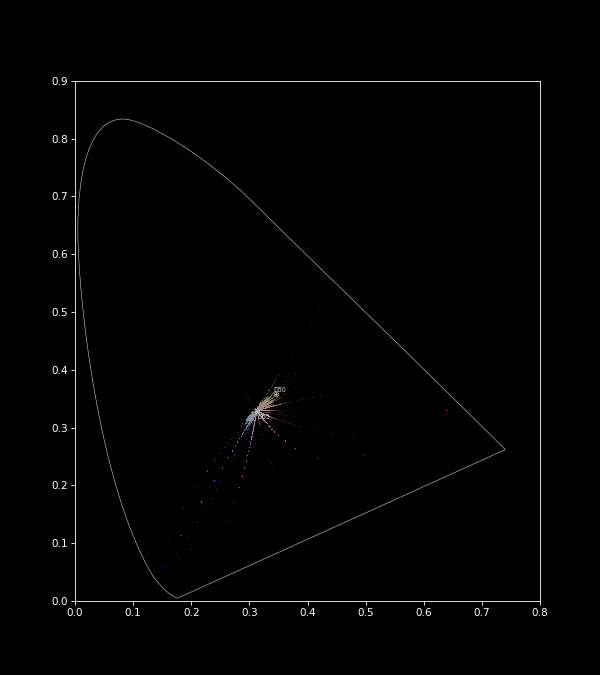

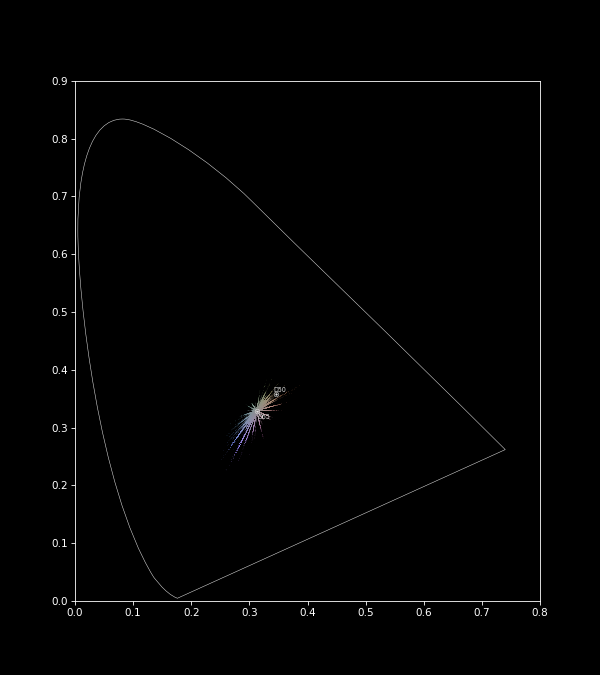

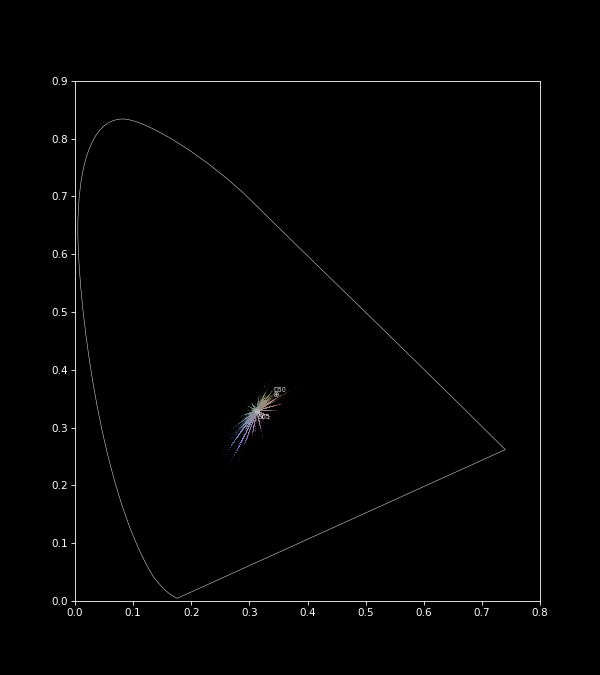

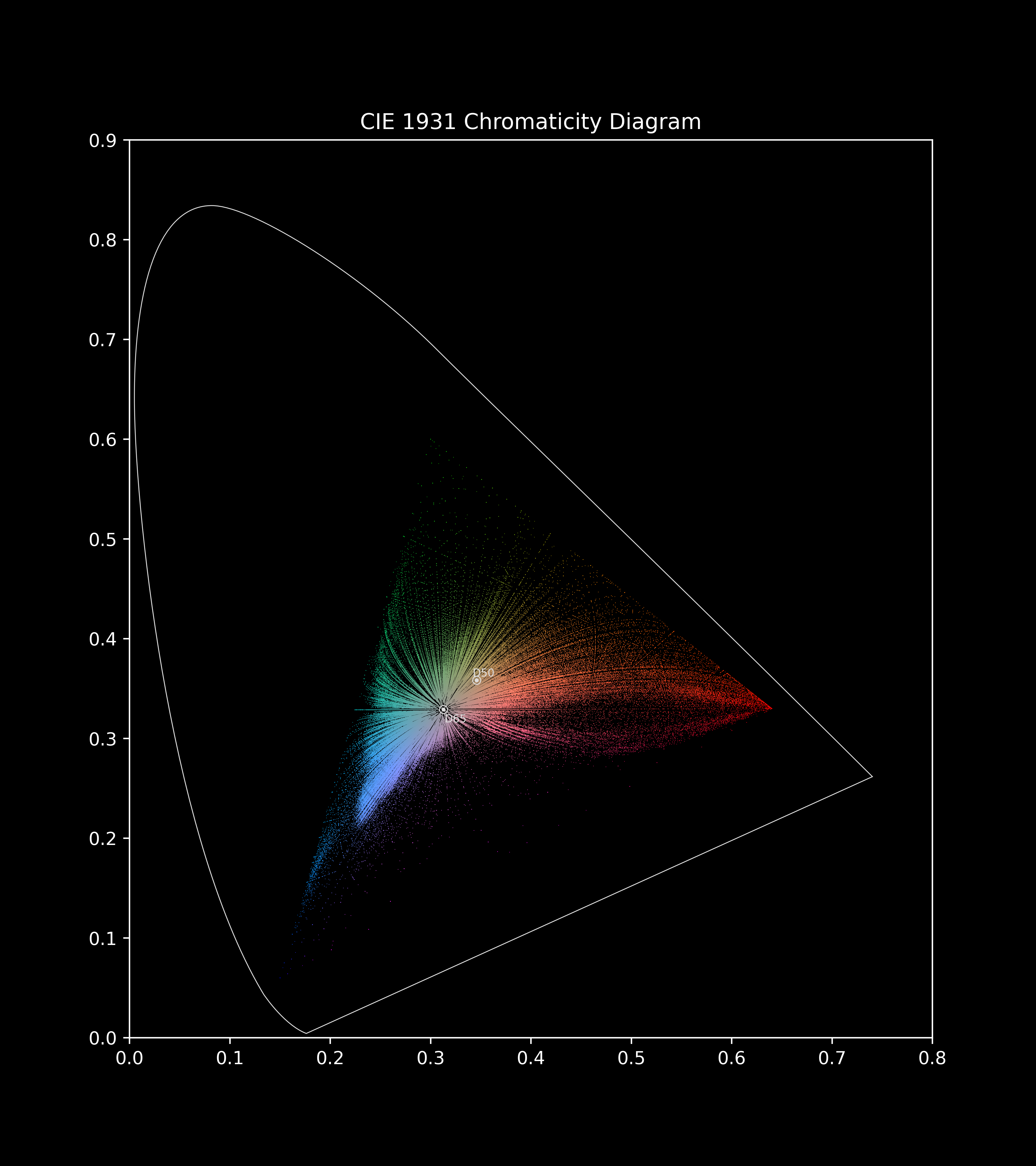

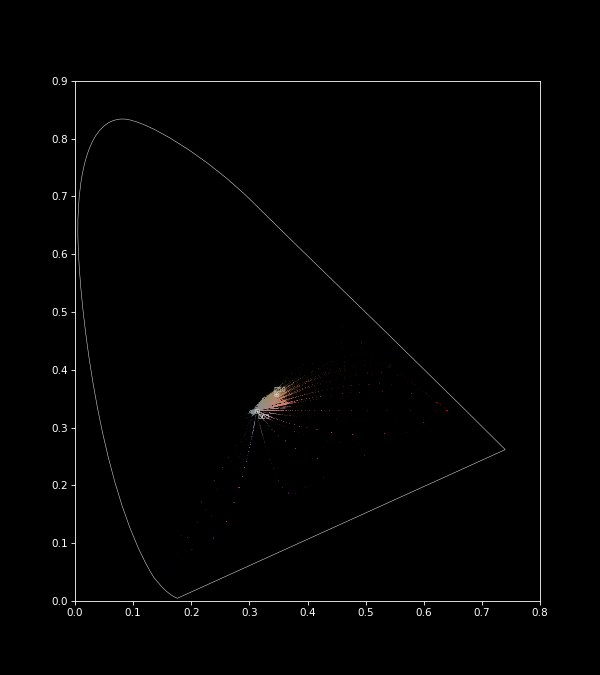

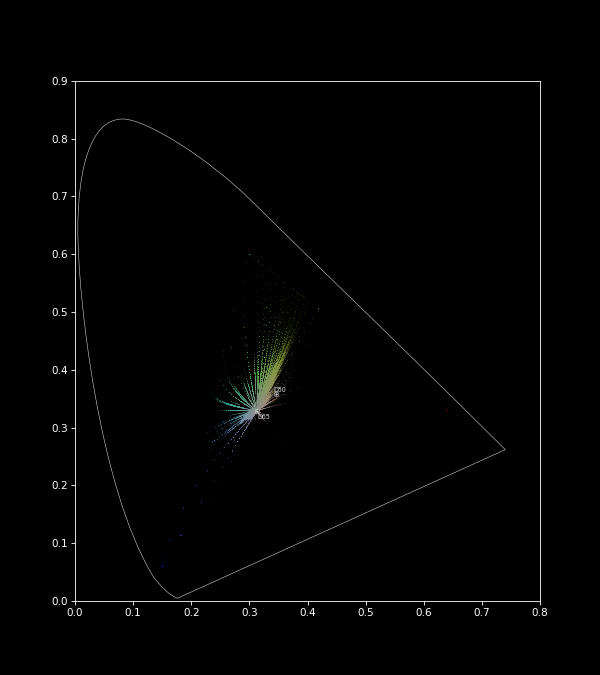

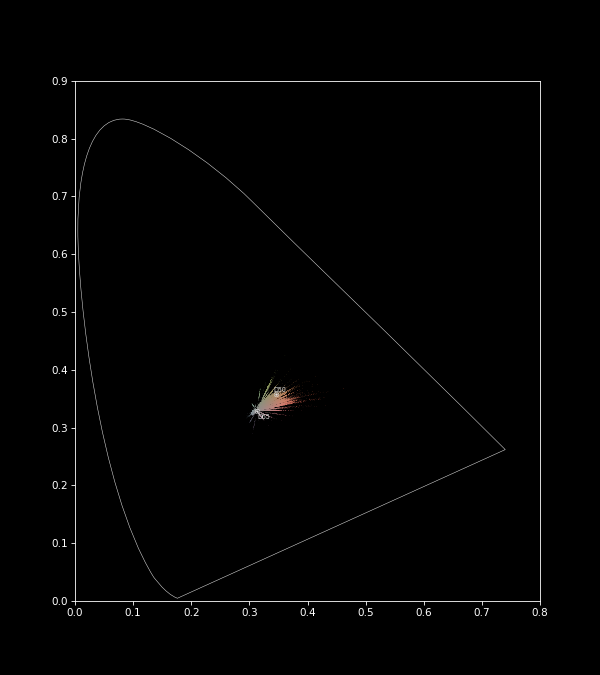

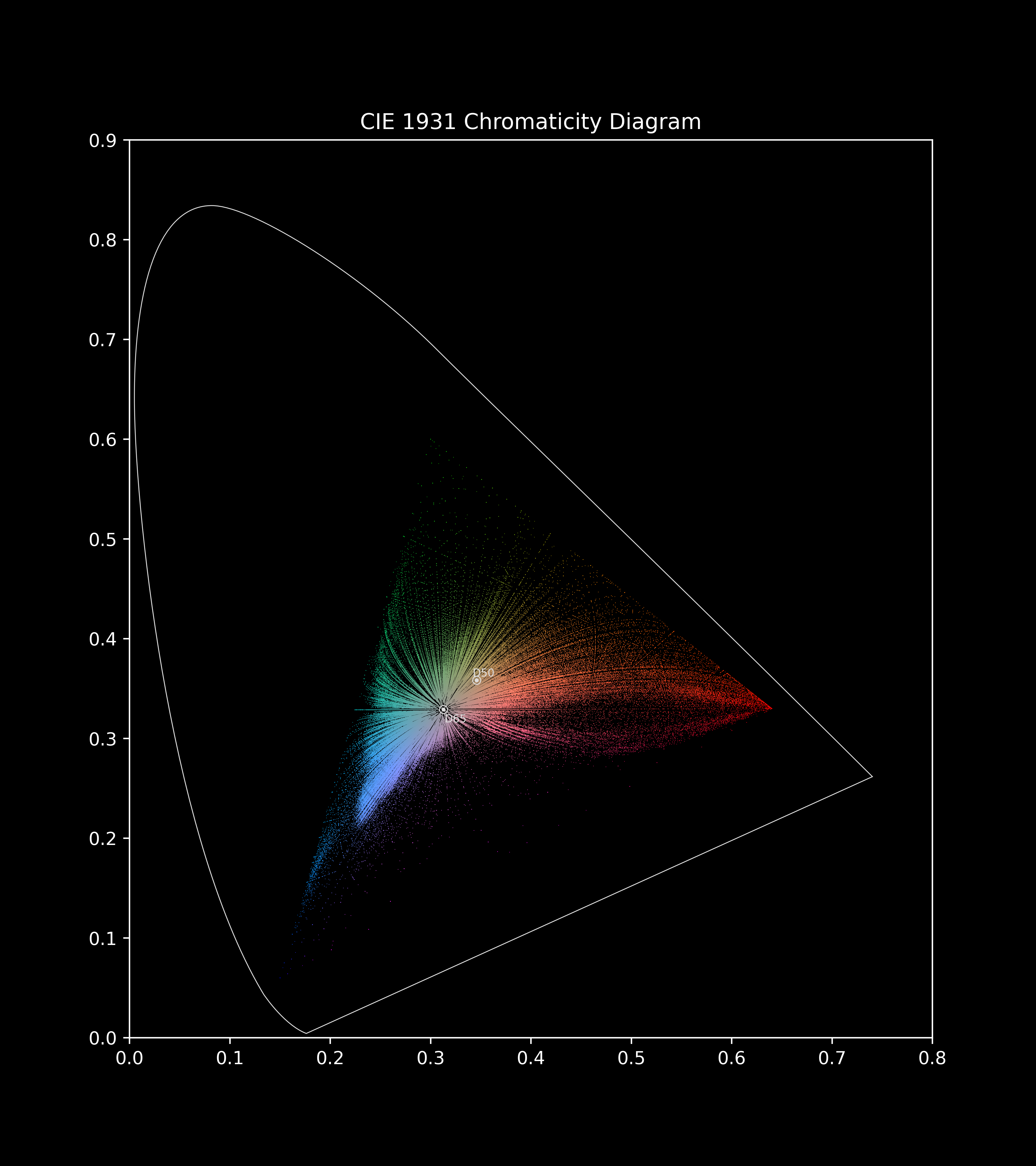

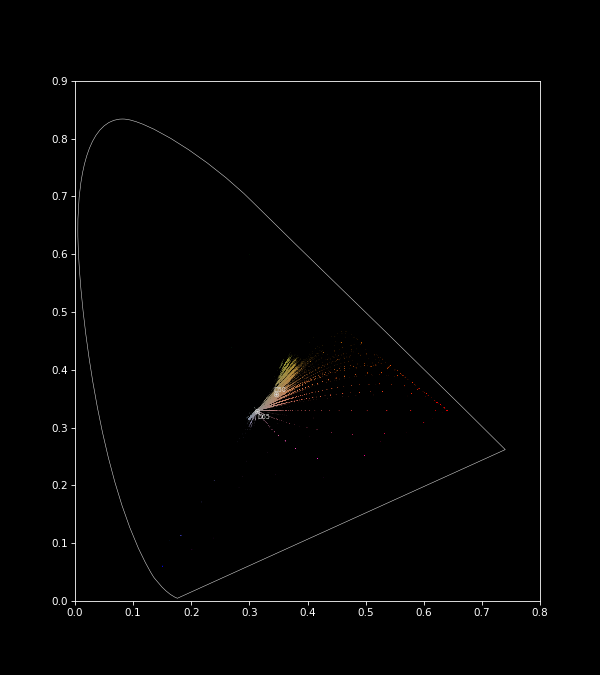

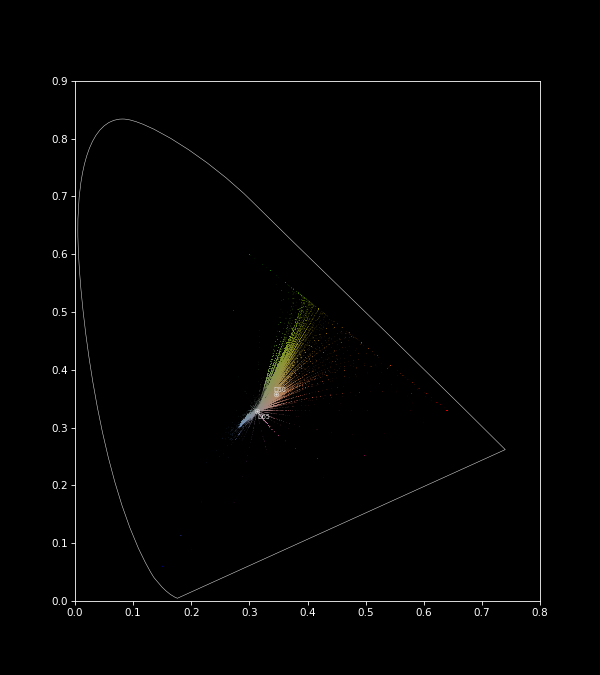

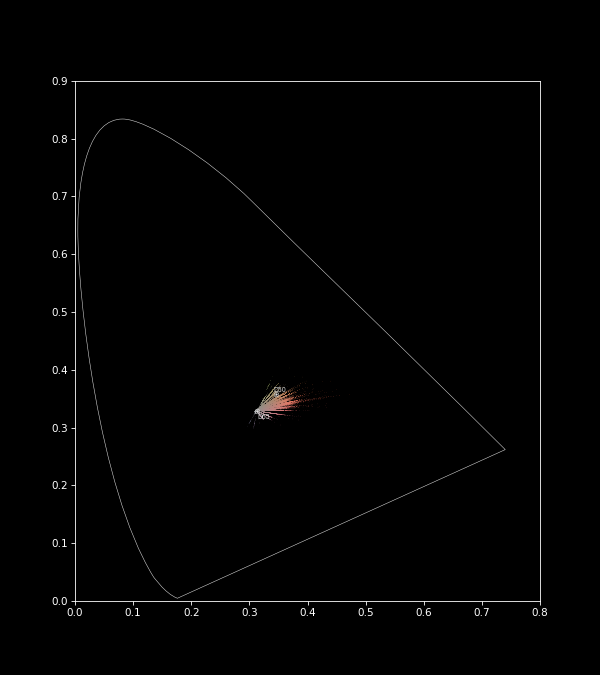

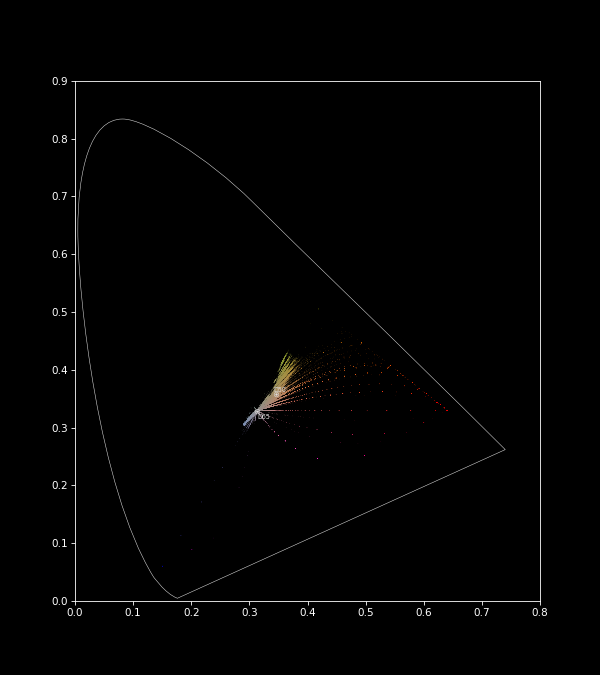

As a result, reference images that differ significantly from the input cannot be used effectively, as illustrated below.

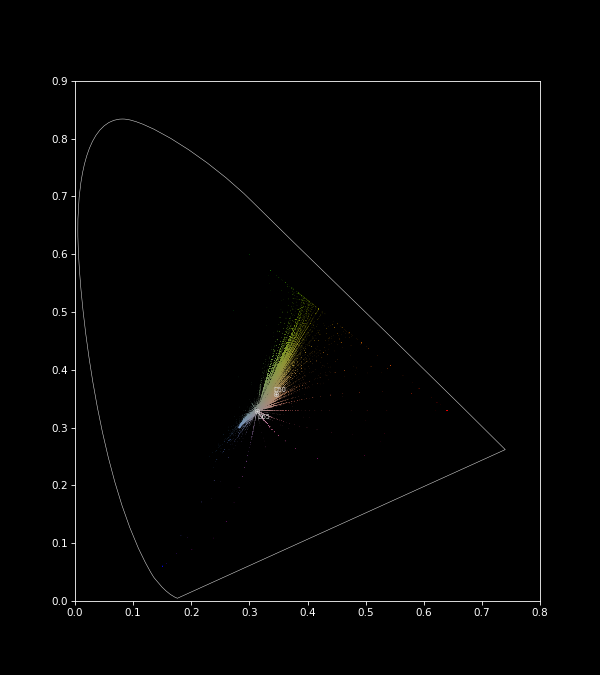

An interesting finding is that certain objects are colored even when they do not appear in the reference images, as long as their colors are present in the references. This suggests that, instead of semantic-to-semantic matching between the gray image and the reference images, the model learns (at least partially) a semantic-to-color correspondence. For example, the sky is colored blue and the leaves green. The semantic matching takes place in feature space, where spatial information is degraded; compare the noise test with the gray test.
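The point that matching happens in feature space, with spatial information largely discarded, can be illustrated with a toy attention step. This is a simplified scaled dot-product attention written for illustration, not DeepRemaster's actual source-reference attention layer; the helper name `attend_colors`, the feature size, and the use of two chroma values per reference position are assumptions. Each source position receives a softmax-weighted mix of the colors at all reference positions, selected by feature similarity alone, so shuffling the reference positions does not change the result.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max for numerical stability before exponentiating
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attend_colors(src_feats: np.ndarray,
                  ref_feats: np.ndarray,
                  ref_colors: np.ndarray) -> np.ndarray:
    """Toy scaled dot-product attention from source to reference positions.

    Hypothetical helper, not DeepRemaster's layer.
    src_feats:  (N, d) features of N source (gray) positions
    ref_feats:  (M, d) features of M reference positions, pooled over all refs
    ref_colors: (M, c) colors (e.g. two chroma channels) at those positions
    returns:    (N, c) color predicted for each source position
    """
    d = src_feats.shape[1]
    scores = src_feats @ ref_feats.T / np.sqrt(d)  # (N, M) feature similarity
    weights = softmax(scores, axis=1)              # each row sums to 1
    return weights @ ref_colors                    # convex mix of ref colors


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    src = rng.normal(size=(6, 16))
    ref = rng.normal(size=(10, 16))
    colors = rng.uniform(-1, 1, size=(10, 2))
    out = attend_colors(src, ref, colors)
    # Shuffling reference positions leaves the result unchanged
    perm = rng.permutation(10)
    print(np.allclose(out, attend_colors(src, ref[perm], colors[perm])))  # True
```

With learned features, a region such as sky or foliage can draw its color from any similar-looking reference region regardless of where that region sits in the reference image, which is consistent with the behavior described above.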

| Task | Image #1 | Image #2 | Image #3 | Reference |
|---|---|---|---|---|
| Recolor source | | | | |

| Task | Image #1 | Image #2 | Image #3 | Reference |
|---|---|---|---|---|
| Full correspondence | | | | |
| Partial source | | | | |
| Semantic correspondence (strong) | | | | |
| Semantic correspondence (weak) | | | | |
| Distractors | | | | |
| Contemporary | | | | |
| Mixed | | | | |

Additional Information

- Last updated: 11 April 2024 09:30

- GPU info: NVIDIA GeForce GTX 1080 Ti 11 GB, Compute Capability 6.1

- CUDA version: 11.7

- PyTorch version: 1.13.1